The AWS Command Line Interface (CLI) can look intimidating when you first start working with Amazon Web Services. Taken step by step, though, it is quite approachable, and it opens the door to more efficient cloud service management. This article walks through the initial setup of the AWS CLI and then moves on to running AWS Glue commands and automating them, so that by the end, managing AWS services from the command line feels within reach.

Setting Up AWS CLI

Setting Up AWS CLI for the First Time

The AWS CLI (Amazon Web Services Command Line Interface) is a powerful tool that allows you to interact with AWS services directly from the command line. Setting it up for the first time might seem daunting, but by following these straightforward steps, you’ll be up and running in no time.

Step 1: Download and Install the AWS CLI

Firstly, visit the official AWS CLI website. Choose the version compatible with your operating system (Windows, macOS, or Linux). Download and run the installer. For macOS and Linux users, you might need to use a terminal command to complete the installation. Follow the prompts until the installation is successful.
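Once the installer finishes, it is worth confirming that the aws executable is actually reachable from your shell. The helper below is a minimal sketch; the function name check_aws_cli is made up for this article, and only aws --version is a real CLI call.

```shell
# Report whether the AWS CLI is on PATH and, if so, which version it is.
# check_aws_cli is a hypothetical helper name used only in this article.
check_aws_cli() {
    if ! command -v aws >/dev/null 2>&1; then
        echo "aws not found on PATH; re-run the installer or fix PATH" >&2
        return 1
    fi
    aws --version
}

# Usage:
#   check_aws_cli
```

If the function reports that aws is missing, opening a fresh terminal is often enough, since PATH changes made by an installer only apply to new sessions.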

Step 2: Create an IAM User

For security reasons, avoid using your AWS root account for everyday work. Instead, navigate to the AWS Management Console, open the IAM (Identity and Access Management) section, and create a new IAM user. Create an access key for that user (older versions of the console call this Programmatic access), which gives you the access key ID and secret access key the CLI needs. Attach only the permissions the user requires, either through a group or by attaching a policy directly to the user.

Step 3: Configure the AWS CLI

With your access key ID and secret access key ready, open a terminal (or command prompt in Windows). Enter the following command:

aws configure

You’ll then be prompted to input your access key ID, secret access key, default region name (found in the AWS regions list), and output format (json is recommended). Once you have filled in these details, the setup is complete.
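If you prefer not to answer prompts, for example when setting up a second profile, the non-secret settings can also be written with aws configure set. The sketch below assumes a profile called glue-demo and the region us-east-1; both are placeholders, and configure_profile is a hypothetical helper name. The access keys themselves are deliberately left out so that they never end up in a script.

```shell
# Write region and output format for a named profile without prompts,
# then show the resulting settings.
# Arguments: profile name, region (both are placeholders you choose).
configure_profile() {
    profile=$1
    region=$2
    aws configure set region "$region" --profile "$profile" &&
    aws configure set output json --profile "$profile" &&
    aws configure list --profile "$profile"
}

# Usage:
#   configure_profile glue-demo us-east-1
```

Any later command can then select these settings by adding --profile glue-demo.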

Step 4: Test Your Setup

To ensure everything is set up correctly, run a simple AWS CLI command to list all S3 buckets:

aws s3 ls

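If the setup is successful, you will see a list of the S3 buckets in your AWS account (or no output at all if the account has no buckets yet). If an error occurs, review the previous steps to ensure all details were entered correctly, and check that the IAM user has permission to list buckets.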
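A second check that does not depend on S3 permissions is to ask AWS which identity the CLI is using. The sketch below wraps aws sts get-caller-identity; the function name current_identity is an assumption made for this article.

```shell
# Print the ARN of the identity the CLI is currently authenticated as.
# current_identity is a hypothetical helper name.
current_identity() {
    aws sts get-caller-identity --query Arn --output text
}

# Usage:
#   current_identity
```

If the ARN that comes back belongs to the IAM user created in Step 2, the credentials from Step 3 are being picked up as intended.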

The AWS CLI is now ready for use. With it, you can effortlessly manage your AWS services directly from the command line, streamline your workflow, and automate tasks through scripts.

Remember, always keep your access keys confidential to prevent unauthorized access to your AWS resources. Happy exploring with AWS CLI!

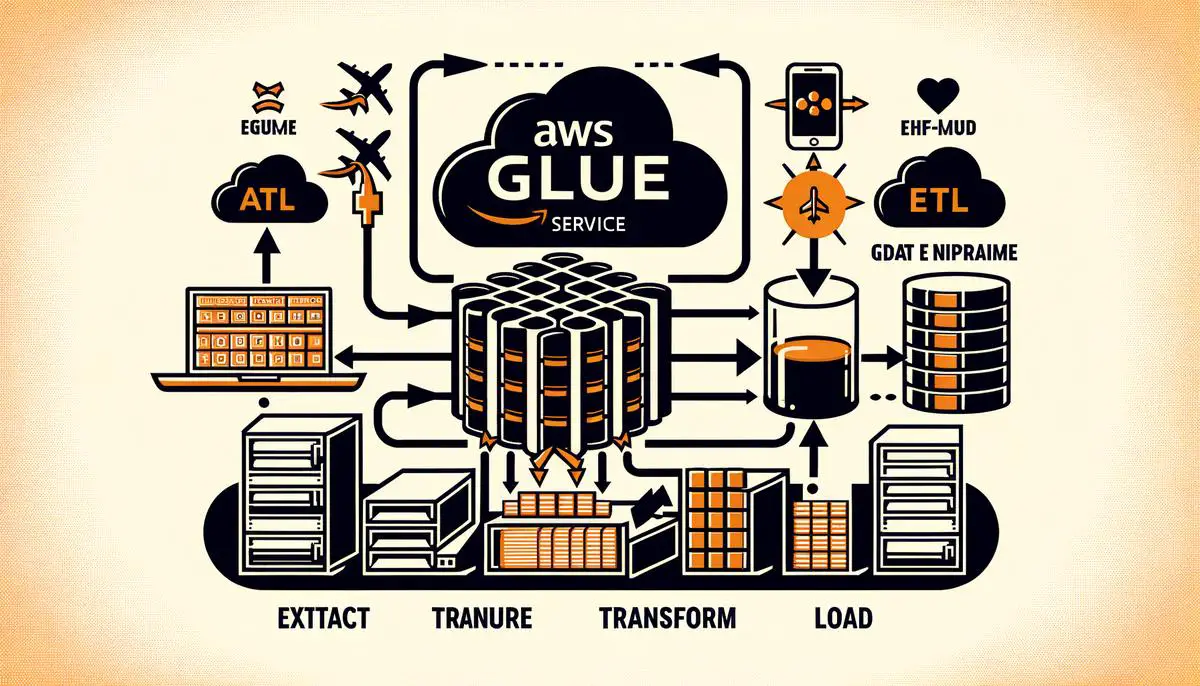

Executing AWS Glue Commands

Executing Basic AWS Glue Commands Using the CLI

Step 5: Understanding AWS Glue Basics

AWS Glue is a fully managed extract, transform, and load (ETL) service that makes it straightforward to prepare and load your data for analytics. Before diving into commands, familiarize yourself with two key concepts in AWS Glue: Crawlers and Jobs. Crawlers are used to scan your data and create metadata tables, whereas Jobs are the ETL scripts that transform, combine, and enrich your data.
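To see which Crawlers and Jobs already exist in your account, you can list their names from the CLI. This is a small sketch that assumes your default region is the one holding your Glue resources; list_glue_resources is a hypothetical helper name.

```shell
# Print the names of the crawlers and jobs defined in the current region.
# list_glue_resources is a hypothetical helper name.
list_glue_resources() {
    echo "Crawlers:"
    aws glue list-crawlers --query 'CrawlerNames' --output text
    echo "Jobs:"
    aws glue list-jobs --query 'JobNames' --output text
}

# Usage:
#   list_glue_resources
```

The names printed here are the values to substitute for my-crawler and my-first-job in the commands that follow.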

Step 6: Running a Crawler

To start, you need to run a crawler that scans your data source and creates a metadata table in the AWS Glue Data Catalog. Use the following command, substituting my-crawler with the name of your crawler:

aws glue start-crawler --name my-crawler

Checking Crawler Status:

After initiating your crawler, check its status to ensure it completes successfully:

aws glue get-crawler-metrics --crawler-name-list my-crawler

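This command returns metrics such as the last runtime, the estimated time remaining, and the number of tables created, updated, and deleted. For the crawler's current state (READY, RUNNING, or STOPPING), use aws glue get-crawler --name my-crawler.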
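In a script you usually want to block until the crawler has finished rather than check by hand. One way to sketch that is to poll aws glue get-crawler until the state returns to READY and then read the status of the last crawl. The function name wait_for_crawler and the 30-second default interval are assumptions for illustration.

```shell
# Poll a crawler until it returns to READY, then print the last crawl's status.
# Arguments: crawler name, seconds between polls (defaults to 30).
# wait_for_crawler is a hypothetical helper name.
wait_for_crawler() {
    crawler=$1
    interval=${2:-30}
    while :; do
        state=$(aws glue get-crawler --name "$crawler" \
            --query 'Crawler.State' --output text) || return 1
        [ "$state" = "READY" ] && break
        echo "crawler $crawler is $state; waiting ${interval}s" >&2
        sleep "$interval"
    done
    aws glue get-crawler --name "$crawler" \
        --query 'Crawler.LastCrawl.Status' --output text
}

# Usage:
#   aws glue start-crawler --name my-crawler
#   wait_for_crawler my-crawler
```

Progress messages go to standard error, so the only thing on standard output is the final status, which makes the function easy to use inside a larger script.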

Step 7: Executing an ETL Job

With your data cataloged, the next step involves transforming your data with an ETL Job. Assuming you’ve already built your job in AWS Glue, execute it using:

aws glue start-job-run --job-name my-first-job

Replace my-first-job with your specific job name.

Monitoring Job Progress:

Track your job’s progress to ensure it’s running as expected:

aws glue get-job-run --job-name my-first-job --run-id jr_123456789

Here, --run-id is the unique identifier for the job run you wish to monitor. Replace jr_123456789 with the actual run ID.
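The run ID does not have to be copied by hand: start-job-run returns it, and a script can capture it and poll until the run ends. The following is a sketch under a few assumptions: the function name run_job_and_wait is made up, the 30-second default interval is arbitrary, and the list of terminal states reflects the job run states as I understand them.

```shell
# Start a Glue job, poll until it reaches a terminal state, and print that state.
# Arguments: job name, seconds between polls (defaults to 30).
# run_job_and_wait is a hypothetical helper name.
run_job_and_wait() {
    job=$1
    interval=${2:-30}
    run_id=$(aws glue start-job-run --job-name "$job" \
        --query 'JobRunId' --output text) || return 1
    echo "started run $run_id" >&2
    while :; do
        state=$(aws glue get-job-run --job-name "$job" --run-id "$run_id" \
            --query 'JobRun.JobRunState' --output text) || return 1
        case "$state" in
            SUCCEEDED) echo "$state"; return 0 ;;
            FAILED|ERROR|TIMEOUT|STOPPED|EXPIRED) echo "$state"; return 1 ;;
        esac
        sleep "$interval"
    done
}

# Usage:
#   run_job_and_wait my-first-job
```

Because the function returns a non-zero exit code for any unsuccessful terminal state, it can be chained with && so that later steps only run after a successful job.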

Step 8: Reviewing ETL Job Output

After job completion, it’s critical to review the output. While specific steps vary depending on your job’s configuration, generally, you’ll navigate to your designated S3 bucket or database to validate the results.
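When the job writes to S3, a recursive listing is a quick first check that files actually landed. The sketch below assumes you know the bucket and prefix your job writes to; no particular location is implied by this guide, and list_job_output is a hypothetical helper name.

```shell
# List objects under an S3 prefix with readable sizes and a summary line.
# Arguments: bucket name, key prefix (both depend on your job's configuration).
# list_job_output is a hypothetical helper name.
list_job_output() {
    aws s3 ls "s3://$1/$2" --recursive --human-readable --summarize
}

# Usage (placeholder bucket and prefix):
#   list_job_output YourBucketName output/
```

The summary at the end of the listing gives the object count and total size, which is a cheap sanity check before opening any of the files.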

Best Practices:

- Regularly review your AWS Glue scripts and optimize them for efficiency.

- Monitor job logs for errors and insights into job execution performance.

- Use Amazon CloudWatch for detailed metrics and to set alarms on your AWS Glue Jobs.
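For the log monitoring mentioned above, job logs can be read from the terminal as well as from the console. This sketch makes several assumptions that you should verify in your own account: that you are on AWS CLI version 2 (which provides aws logs tail), that the job uses the default error log group /aws-glue/jobs/error, and that the log stream names begin with the job run ID. The function name tail_job_errors is hypothetical.

```shell
# Show recent entries from the default Glue error log group for one job run.
# Arguments: job run ID, look-back window (defaults to 1h).
# Assumes AWS CLI v2 and the default log group name; adjust if yours differs.
tail_job_errors() {
    aws logs tail /aws-glue/jobs/error \
        --log-stream-name-prefix "$1" --since "${2:-1h}"
}

# Usage:
#   tail_job_errors jr_123456789 2h
```

Swapping the log group for /aws-glue/jobs/output would show the job's standard output instead, again assuming the default naming.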

By following these steps and leveraging the AWS Glue service effectively, you can streamline your data ETL processes, making it easier to prepare data for analytics and machine learning tasks.

Scripting with AWS CLI for Glue

With the basics in place, the next step is automating AWS Glue tasks with the AWS CLI so that crawlers and jobs run without manual intervention:

Step 9: Scheduling AWS Glue Jobs With the AWS CLI

To automate tasks in AWS Glue, scheduling Jobs is a fundamental task. AWS Glue Jobs are essentially the heart of your ETL (Extract, Transform, Load) process, allowing you to prepare and transform data for analytics.

- Create a Job Script: Before scheduling, ensure you have a script ready for the ETL job. This script informs AWS Glue about the data source, the transformations to apply, and the target destination for the output.

- Command to Create a Job:

aws glue create-job --name "YourJobName" --role "YourIAMRole" --command '{"Name": "glueetl", "ScriptLocation": "s3://YourBucketName/YourScriptLocation", "PythonVersion": "3"}' --region YourRegion

Replace “YourJobName”, “YourIAMRole”, “s3://YourBucketName/YourScriptLocation”, and “YourRegion” with your specific details.

- Scheduling the Job: After your job is created, you can schedule it using the AWS CLI’s create-trigger command. This enables the job to run at specified intervals or in response to specific events.

aws glue create-trigger --name "YourTriggerName" --type "SCHEDULED" --schedule "cron(0 12 * * ? *)" --actions '[{"JobName": "YourJobName"}]' --region YourRegion

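This example schedules the job to run daily at noon UTC. The schedule uses AWS's six-field cron syntax (minutes, hours, day of month, month, day of week, year), which allows for intricate scheduling patterns. A trigger created this way is not active until it is started, either by adding --start-on-creation to the create-trigger command or by running aws glue start-trigger afterwards.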
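After creating a scheduled trigger, it is worth checking that it is really active, since a trigger left in the CREATED state will not fire. The sketch below reads the trigger's state with get-trigger and calls start-trigger only when needed. The function name ensure_trigger_active is an assumption made for this article.

```shell
# Activate a trigger unless it is already ACTIVATED, then print its state.
# Argument: trigger name.
# ensure_trigger_active is a hypothetical helper name.
ensure_trigger_active() {
    trigger=$1
    state=$(aws glue get-trigger --name "$trigger" \
        --query 'Trigger.State' --output text) || return 1
    if [ "$state" != "ACTIVATED" ]; then
        aws glue start-trigger --name "$trigger" >/dev/null || return 1
    fi
    aws glue get-trigger --name "$trigger" --query 'Trigger.State' --output text
}

# Usage:
#   ensure_trigger_active YourTriggerName
```

Right after start-trigger the reported state may briefly be ACTIVATING rather than ACTIVATED, so a second call a few seconds later gives the settled value.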

Step 10: Automating Crawler Execution

Beyond ETL jobs, it’s often necessary to automate the execution of Crawlers. AWS Glue Crawlers scan your data sources and update your Data Catalog with metadata.

- Command to Start a Crawler:

aws glue start-crawler --name "YourCrawlerName" --region YourRegion

- Creating a Trigger for Your Crawler: Similar to Jobs, you can also create triggers for Crawlers to automate their execution. You may choose to run Crawlers on a schedule or in response to specific AWS events.

aws glue create-trigger --name "YourTriggerNameForCrawler" --type "SCHEDULED" --schedule "cron(0 2 * * ? *)" --actions '[{"CrawlerName": "YourCrawlerName"}]' --region YourRegion

This command sets the Crawler to run daily at 2 a.m. UTC.
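As an alternative to a separate trigger, a crawler can also carry its own schedule, set with update-crawler. The sketch below is offered as one option rather than the recommended approach; set_crawler_schedule is a hypothetical helper name, and the call may be rejected while the crawler is running.

```shell
# Attach a cron schedule directly to a crawler and print the stored expression.
# Arguments: crawler name, schedule expression such as "cron(0 2 * * ? *)".
# set_crawler_schedule is a hypothetical helper name.
set_crawler_schedule() {
    aws glue update-crawler --name "$1" --schedule "$2" &&
    aws glue get-crawler --name "$1" \
        --query 'Crawler.Schedule.ScheduleExpression' --output text
}

# Usage:
#   set_crawler_schedule YourCrawlerName "cron(0 2 * * ? *)"
```

Reading the expression back from get-crawler confirms that the schedule was stored as intended.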

Conclusion:

Automating AWS Glue tasks using the CLI simplifies data management processes, making them more efficient and less prone to human error. By following the outlined steps to schedule both ETL Jobs and Crawlers, users can ensure that their data ecosystem is up-to-date and ready for analysis without manual intervention. Incorporating these practices into your workflow not only saves time but also empowers teams to focus on deriving insights and value from their data. Remember, the power of automation lies in its ability to make complex processes manageable and more accessible.

By combining the AWS CLI with the automation capabilities of AWS Glue, you can manage crawlers, jobs, and their schedules from scripts rather than by hand. That frees up time for what truly matters: extracting meaningful insights and value from your data. As these services continue to evolve, keeping your AWS CLI and AWS Glue skills current will continue to pay off.